Title: Automated Planning of Whole-Body Motions for Everyday Household Chores with a Humanoid Service Robot

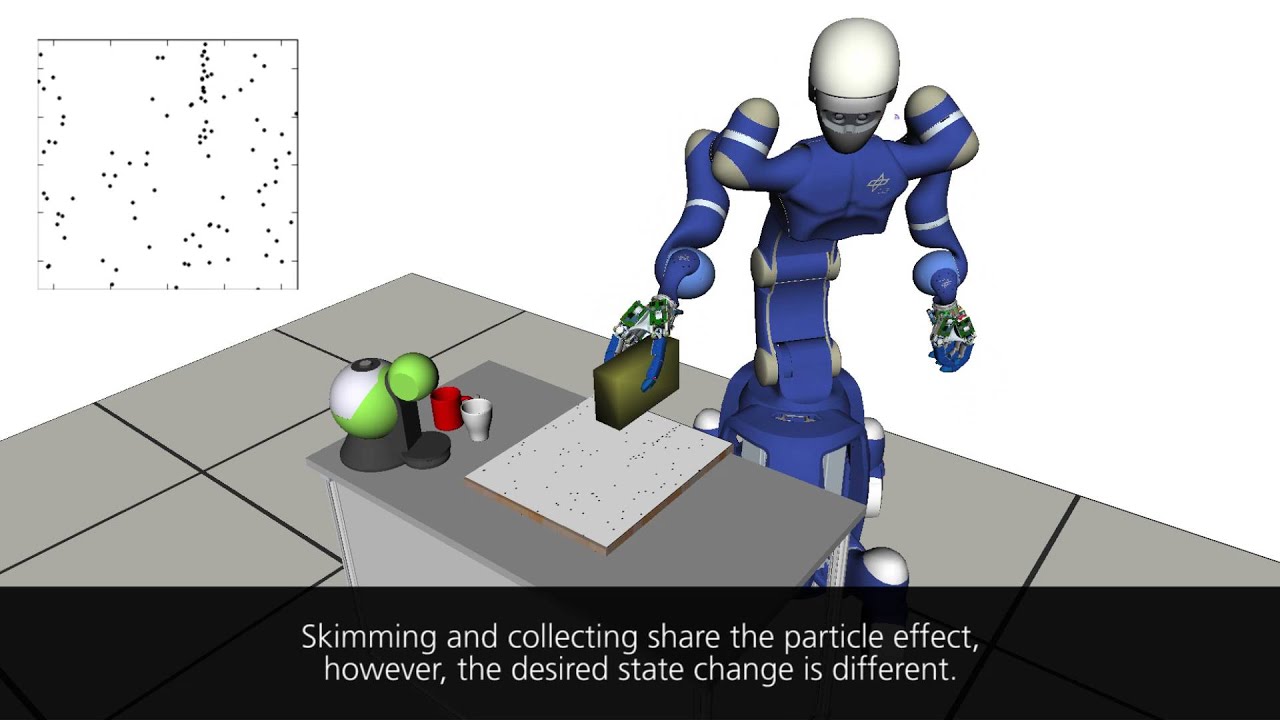

Abstract: Wiping actions are required in many everyday household activities. Whether skimming bread crumbs off a table top with a sponge or collecting the shards of a broken mug from the floor with a broom, future service robots are expected to master such manipulation tasks with a high level of autonomy. In contrast to actions such as pick-and-place, where the effects are observed immediately, wiping tasks require a more elaborate planning process to achieve the desired outcome. The wiping motions have to adapt autonomously to different environment layouts and to the specifications of the robot and the tool involved. The work presented in this report proposes a strategy, called extended Semantic Directed Graph (eSDG), for mapping wiping-related semantic commands to joint motions of a humanoid robot. The medium (e.g. bread crumbs, water, or shards of a broken mug) and the physical interaction parameters are represented in a qualitative model as particles distributed on a surface. eSDG combines information from the qualitative model, the geometric state of the robot and the environment, and the specific semantic goal of the wiping task to generate executable, goal-oriented Cartesian paths. The robot's reachable workspace is used to reason about partitioning the wiping task into smaller sub-tasks that can each be executed from a static position of the robot base. For the eSDG path-following problem, a cascading structure of inverse kinematics solvers is developed in which the degree of freedom of the involved tool is exploited in favor of wiping quality. The proposed approach is evaluated in a series of simulated scenarios, and the results are validated in a real experiment on the humanoid robot Rollin’ Justin.
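The idea of representing the medium as particles distributed on a surface can be illustrated with a minimal Python sketch. This is not the eSDG implementation: the names `Particle`, `scatter_particles`, `wipe_stroke`, and `collected_fraction` are hypothetical, and the interaction rule (a straight stroke pushes every particle under the tool to the end of the stroke) is a simplifying assumption made only for illustration.

```python
import random
from dataclasses import dataclass


@dataclass
class Particle:
    """One unit of the medium (e.g. a crumb) at a position on the surface."""
    x: float
    y: float


def scatter_particles(n, width, height, seed=0):
    """Distribute n particles uniformly over a width x height surface."""
    rng = random.Random(seed)
    return [Particle(rng.uniform(0, width), rng.uniform(0, height))
            for _ in range(n)]


def wipe_stroke(particles, y_center, tool_width, x_start, x_end):
    """Apply one straight stroke along x, from x_start to x_end (x_start < x_end).

    Assumed interaction rule: every particle inside the band covered by the
    tool, and located between the start and end of the stroke, is pushed to
    x_end. Particles outside the band are left where they are.
    """
    half = tool_width / 2
    for p in particles:
        in_band = abs(p.y - y_center) <= half
        if in_band and x_start <= p.x <= x_end:
            p.x = x_end


def collected_fraction(particles, goal_x, tol=1e-9):
    """Fraction of particles that have reached the goal edge x = goal_x."""
    at_goal = sum(1 for p in particles if abs(p.x - goal_x) <= tol)
    return at_goal / len(particles)


if __name__ == "__main__":
    # A 1.0 m x 0.6 m table top and a 0.2 m wide sponge (illustrative values).
    crumbs = scatter_particles(200, width=1.0, height=0.6)
    for y in (0.1, 0.3, 0.5):  # three parallel strokes covering the surface
        wipe_stroke(crumbs, y_center=y, tool_width=0.2, x_start=0.0, x_end=1.0)
    print(f"collected: {collected_fraction(crumbs, goal_x=1.0):.2f}")
```

In a model of this kind, a semantic goal such as "collect" can be scored by how many particles end up in a target region, which gives a planner a way to compare candidate Cartesian paths before execution.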
